Did CHOs make us human? I doubt it

“I like to start with an evolutionary perspective” — Jennie Brand-Miller

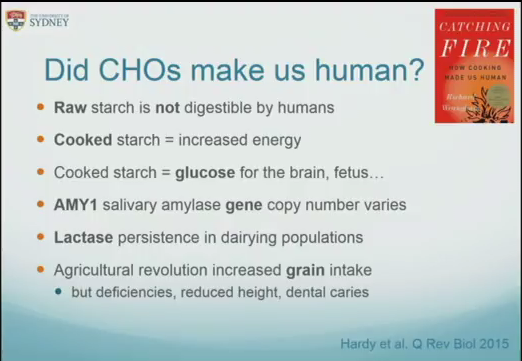

Today at the Food for Thought Conference, Jennie Brand-Miller argued that dependence on exogenous glucose played a critical role in our evolution. I and others disagree for several reasons. Let’s look at the main arguments Brand-Miller put forward in support of this view.

- The brain requires a lot of energy

- The brain runs on glucose

- The need for dietary glucose is particularly acute in fetuses

- The cooking of starch allowed us to get that energy

- Some modern hunter-gatherers make significant use of exogenous glucose

- We have many more copies of amylase than other primates

- Some of us have developed lactase persistence

- We are hard wired to love sweetness

Yes, the brain requires a lot of energy; no, it does not have to come from dietary glucose

I agree wholeheartedly that our brains require a lot of energy, much more than other organs, and that our needs are many times more acute than those of other primates. Getting this energy was critical for our evolution. However, the idea that the brain “runs” on glucose, and that this shows a requirement for exogenous glucose, is incorrect and omits well-known evidence.

First, our bodies are capable of synthesising enough glucose in the absence of dietary sources to fulfil the most conservative estimates of requirements. As conceded early in the presentation, we are known to be able to survive without exogenous glucose. If we could not supply our brain’s needs in this way, this would simply not be possible. That glucose is mostly synthesised from protein, in a process called gluconeogenesis. This fact alone is enough to render the argument irrelevant, but there is more. When no dietary glucose is provided, not only can we still make enough glucose endogenously to meet those needs, but in practice our needs change. Instead of running primarily on glucose, our brain’s metabolism runs mostly on ketone bodies and uses glucose for only a small portion of its needs, far less than our capacity to generate it.

Fetal and infant growth does not depend on dietary glucose

Brand-Miller also insists that “The fetus grows on the mother’s maternal blood glucose.”, as if this should settle the matter once and for all. However, she neglects to mention that fetuses make extensive use of ketones. I’ve covered infant brain growth and the importance of ketone bodies in this context several times, so I won’t go into it here. See Babies thrive under a ketogenic metabolism, Meat is best for growing brains, What about the sugars in breast milk?, and Optimal Weaning from an Evolutionary Perspective, for fully referenced discussion of the fuel used by growing babies. In any case, maternal blood glucose is maintained just fine without dietary sources, so even if babies did not use ketones, the point would be moot.

The evolutionary argument

Since our brain energy needs are met perfectly well with either a high or a low glucose intake, it cannot reasonably be argued that our large brains must have developed under conditions of high glucose intake. At this point there are still two equally plausible hypotheses that would account for the evolutionary development of large brains: increased consumption of exogenous glucose, and increased consumption of exogenous fat. An increase in both, either together or in alternation, is also a plausible hypothesis. For simplicity, let’s start by treating one state or the other as the predominant evolved state. Let’s review what evolutionary circumstances each hypothesis would require, and what other circumstances would support it without being necessary. Then we can see what evidence we have for those circumstances.

Persistent adequate availability of the predominant energy source and essential micronutrients

- For the exogenous glucose condition to have been the predominant evolved state, we would have required a consistent source of exogenous glucose on a regular basis, year round, for multiple generations.

- For the endogenous glucose condition to have been the predominant evolved state, we would have required a consistent source of exogenous fat and protein on a regular basis, for multiple generations.

The reason we would need fat, and not just protein, in the gluconeogenesis case is that we are limited in our ability to metabolise protein. Because of its structural importance, protein is better conceived of as mainly a micronutrient rather than a macronutrient. Besides water, our bodies are primarily made of amino acids and fatty acids. This is one reason why, when we rely on gluconeogenesis for all of our glucose needs, we also have reduced glucose needs: it spares protein for more important things.

Protein availability is also of crucial importance even for the exogenous glucose hypothesis, because it is still a fundamental nutritional need outside of energy requirements.

Beyond protein, we would need to supply all of the nutrients that proper brain development requires. These include the minerals iodine, selenium, iron, and zinc; vitamins B12, A, and D; the vitamin-like nutrient choline; and the fatty acids DHA and arachidonic acid. Note that for the minerals listed here, animal sources are much more bioavailable, and plant sources contain substances that actively interfere with their absorption. Of the vitamins and fatty acids, one (B12) is not available from plants even in precursor form, and the others are available from plants only in precursor form. Humans are known to have a low and variable ability to synthesise the necessary components from precursors. It is generally agreed in the scientific community that, because of these hard requirements of the brain, a significant level of animal sourced food must have been part of our evolutionary heritage. This is supported by the absence of evidence for any indigenous society that did not include some form of animal sourced food.

If the energy source were dietary glucose, we would require:

A year round, abundant source of glucose coming from

- starchy tubers AND widespread use of cooking

AND/OR

- sugar in the form of low fibre fruit and honey

The evidence for cooked tubers is weak at best. First, the tubers available at the time were seasonal and limited in supply. Second, they were highly fibrous, much more so than today’s bred varieties, so they didn’t yield much [1]. The wild types still eaten by the Hadza, for example, have a low yield of glucose even when cooked. According to Schnorr, who studied this, “When roasted, they produce more energy, although the difference is not great. In the real world, the additional calorie content is probably lower than the cost of making a fire.” (https://www.universiteitleiden.nl/en/news/2016/03/roasting-turnips-for-science)

Third, there is little evidence of access to fire in the time period in question. The most avid advocate of this theory, Wrangham (whose book cover is featured on the slide), must resort to theorising that fire was available and widespread long before the date current evidence supports [1]. His date estimates for the use of fire were developed by reasoning backwards from the assumption that starch was the evolutionary reason for our brains! Since the only way we could have eaten starch was by cooking it, and since our brains changed around 2 million years ago, Wrangham infers that we must have had the use of fire at that time [2]. Absence of evidence alone cannot refute the theory, but it does remain theoretical, and it is less probable given that we would expect to have found at least some such evidence by now.

As for fruit and honey, they are an unlikely year round source of energy in the evolved environment, particularly during ice ages, and their harvest season would not complement that of tubers. The so-called fruit-based diet of other primates is actually a fibre-based diet (i.e. a diet based on fat derived from fibre), as Miki Ben-Dor has shown quantitatively. That’s because, as in the case of tubers, wild fruits were mainly fibre.

This points to the drastic difference in digestive systems between humans and our closest relatives. They clearly have an extensive capacity for hindgut fermentation, and we clearly do not. If fruits were a major source of energy for humans, they would not have been the fibrous ones our primate cousins eat. There is no coherent continuity argument available from that perspective.


In this scenario we would also still require animal sourced food for protein and other micronutrients, as discussed above.

If the energy source were dietary fat and protein, we would require:

A year round, abundant source of fat and protein from

- hunted or scavenged game

AND/OR

- nuts

Eating at least some hunted or scavenged game is well supported by evidence of various kinds. We have direct evidence of hunting and scavenging, in the form of tools and bones, going back long before evidence of fire. Unlike in the case of tubers, cooking is not necessary to obtain nutrients and energy from meat and animal fat. Because of our protein and nutrient requirements, we would already have had to eat animal sourced food anyway, regardless of our source of energy.

If the meat were lean, this would not by itself solve the energy problem. However, evidence suggests that the game available during the time in question consisted of megafauna much fattier than modern, leaner game [3]. Insofar as this is true, it seems a stretch to suggest that early humans would have hunted game that met their protein and nutrient requirements, and then discarded an abundant energy source that came right along with it in favour of tubers. Moreover, evidence suggests that our meat eating began with scavenging bones and skulls from the kills of other carnivores: eating some scraps of meat, but primarily the marrow and high-fat brains we were able to crack our way into.

In fact, the diversity of human diet after the extinction of the megafauna can be viewed as a variety of adaptations to the loss of our evolved diet, rather than evidence that we evolved to eat exactly like any one of them in particular. This brings us to the supplemental arguments from Brand-Miller.

What can we conclude from the diets of modern hunter-gatherer societies?

It seems disingenuous to cite the Hadza, the society with the highest reported carbohydrate intake, as evidence that we need carbohydrates to thrive. Several known indigenous peoples and other groups subsisted on very low exogenous carbohydrate levels before being introduced to wheat and sugar. These include Mongolians, Plains Indians, Brazilian gauchos, Arctic peoples, and the Maasai. The very existence of these societies contradicts the thesis. However, none of them, high carbohydrate or low, should be used as a demonstration of a particular evolved way of eating. Each shows a way of eating that is viable in its given environment. Carbohydrate can be used as a primary energy source, and so can fat. But this is not enough to show which, if either, was primary during the time our brains evolved to make us anatomically modern.

Why do we have more copies of amylase than other primates?

On average we have more copies of AMY1 than other primates. Brand-Miller claims that the number of copies of our salivary amylase gene changed because of our intake of dietary carbohydrates. This is a hypothesis. Fernández and Wiley recently discussed several problematic inconsistencies in this hypothesis [4], including the high variability in every population, the fact that starch digestion isn’t materially affected by salivary amylase, and the existence of alternative possible functions of the gene. I have touched on the gene’s apparent relationship to stress in a previous post (Science Fiction).

Some populations have extended lactase production

I think this points to greater use of animal-based nutrition, rather than to a need for sugar in particular.

Hard wired to love sweetness

The hard-wired response to the taste of sweet is important and interesting, but I think it shows that sugar was rare, not a staple.

- We crave sweets. Craving is different from hunger. Craving indicates a different kind of reward mechanism than one based on need. It is intensified by intermittent availability and scarcity.

- Relatedly, there seems to be no satiety mechanism for sweetness. This is in stark contrast to protein and fat, both of which induce satiety. To me this indicates precisely that the environmental availability of sugar and starch was limited. If it had been unlimited, we would have had to develop internal responses to maintain homeostasis. As it is, it argues for seasonal opportunities to gorge, at best.

- The body has limited ability to store glucose. If glucose were the fuel of choice, it seems likely that we would have benefitted from expanded glycogen storage. Instead what we have is fat storage. We can afford to overeat sugar if we store it as fat, and then use the fat as fuel over time. The only way we can use fat as fuel is if we have stopped eating glucose for a significant period.

Evidence supporting endogenous glucose rather than exogenous as our evolved default

In contrast to other species we have studied, humans stay in ketosis even when they eat substantially more protein than basic needs require. Like many species, in the absence of significant dietary carbohydrate, when our protein or caloric needs are not met, we produce ketones to spare protein and provide non-glucose energy, and we change our metabolisms to require less glucose. Once protein is sufficient, though, other species go back to a glucose-based metabolism. Humans do not. Humans, apparently uniquely, continue in ketogenic mode until and unless so much protein is ingested that the amount being metabolised results in so much glucose that it has to be stored. This suggests that humans had an evolutionary timespan in which access to protein and fat was consistently high and carbohydrate intake was low, at least for long periods. I review the evidence for this here: Ketosis Without Starvation: the human advantage

Summary

Brand-Miller’s evolutionary argument that dietary carbohydrate was the fuel that allowed us to grow our large brains:

- Ignores our capacity for endogenous glucose and ketone body supply

- Relies on doubtful claims about the ubiquitous availability of sufficient starch and sugar as well as widespread use of fire at the time in question

- Presumes that the animal fat we are known to have procured was not a sufficient source of energy

- Makes incorrect assumptions about fuel sources in other primates

- Cherry-picks from modern hunter-gatherer societies those that support the claim that dietary glucose is necessary, while ignoring those that refute it

- Conflates “glucose is sufficient” with “glucose is necessary” for supplying brain energy

- Evinces a poor understanding of fetal and perinatal fuel supply

The available evidence supports at best a seasonally alternating system of glucose and animal fat reliance for brain energy, and does not refute a long-term evolutionary adaptation to little and infrequent dietary glucose.

References

1. From the ape’s dilemma to the weanling’s dilemma: early weaning and its evolutionary context. Kennedy GE. J Hum Evol. 2005 Feb;48(2):123-45. Epub 2005 Jan 18.

“Although some researchers have claimed that plant foods (e.g., roots and tubers) may have played an important role in human evolution (e.g., O’Connell et al., 1999; Wrangham et al., 1999; Conklin-Brittain et al., 2002), the low protein content of ‘‘starchy’’ plants, generally calculated as 2% of dry weight (see Kaplan et al., 2000: table 2), low calorie and fat content, yet high content of (largely) indigestible fiber (Schoeninger et al., 2001: 182) would render them far less than ideal weaning foods. Some plant species, moreover, would require cooking to improve their digestibility and, despite claims to the contrary (Wrangham et al., 1999), evidence of controlled fire has not yet been found at Plio-Pleistocene sites. Other plant foods, such as the nut of the baobab (Adansonia digitata), are high in protein, calories, and lipids and may have been exploited by hominoids in more open habitats (Schoeninger et al., 2001). However, such foods would be too seasonal or too rare on any particular landscape to have contributed significantly and consistently to the diet of early hominins. Moreover, while young baobab seeds are relatively soft and may be chewed, the hard, mature seeds require more processing. The Hadza pound these into flour (Schoeninger et al., 2001), which requires the use of both grinding stones and receptacles, equipment that may not have been known to early hominins. Meat, on the other hand, is relatively abundant and requires processing that was demonstrably within the technological capabilities of Plio-Pleistocene hominins. Meat, particularly organ tissues, as Bogin (1988, 1997) pointed out, would provide the ideal weaning food.”

2. Toward a Long Prehistory of Fire. Chazan M. Current Anthropology. 2017;58(S16):S351-S359.

“This article explores a conception of the origins of fire as a process of shifting human interactions with fire, a process that, in a sense, still continues today. This is a counterpoint to the dominant narrative that envisions a point of “discovery” or “invention” for fire. Following a discussion about what fire is and how it articulates with human society, I propose a potential scenario for the prehistory of fire, consisting of three major stages of development. From this perspective, obligate cooking developed gradually in the course of human evolution, with full obligate cooking emerging subsequent to modern humans rather than synchronous with the appearance of Homo erectus as envisioned by the cooking hypothesis.”

…

“Wrangham and his collaborators work from the observation “that present-day humans cannot extract sufficient energy from uncooked wild diets” (Carmody et al. 2016:1091). From this observation, the logical inference is that obligate cooking must have a point of origin in hominin phylogeny. This point of origin is then mapped onto the increase in hominin brain and body size ca. 2 million years ago, leading to the proposition that obligate cooking began with Homo erectus and was a characteristic of subsequent taxa on the hominin lineage. The power of the approach taken by the cooking hypothesis is that it is at least partially testable based on experimental studies on the physiological and molecular correlates of consumption of cooked food (see literature cited in Carmody et al. 2016; Wrangham 2017). However, this approach also has a number of shortcomings. First, while obligate cooking necessarily must have a point of phylogenetic origin, the same is not true for cooking that might become integrated into hominin adaptation through a process rather than as the result of a single event. Second, while many aspects of human obligate cooking can be experimentally tested, there is no current method for testing whether H. erectus required regular cooked food. In fact, the placement of the onset of obligate cooking at 2 million years ago is not directly testable without recourse to the archaeological record (including recovery of residues from fossils; see Hardy et al. 2017).”

3. Linking Top-down Forces to the Pleistocene Megafaunal Extinctions. Ripple WJ, Van Valkenburgh B. BioScience. 2010 Jul/Aug;60(7):516-526.

“Humans are well-documented optimal foragers, and in general, large prey (ungulates) are highly ranked because of the greater return for a given foraging effort. A survey of the association between mammal body size and the current threat of human hunting showed that large-bodied mammals are hunted significantly more than small-bodied species (Lyons et al. 2004). Studies of Amazonian Indians (Alvard 1993) and Holocene Native American populations in California (Broughton 2002, Grayson 2001) show a clear preference for large prey that is not mitigated by declines in their abundance. After studying California archaeological sites spanning the last 3.5 thousand years, Grayson (2001) reported a change in relative abundance of large mammals consistent with optimal foraging theory: The human hunters switched from large mammal prey (highly ranked prey) to small mammal prey (lower-ranked prey) over this time period (figure 7). Grayson (2001) stated that there were no changes in climate that correlate with the nearly unilinear decline in the abundance of large mammals. Looking further back in time, Stiner and colleagues (1999) described a shift from slow-moving, easily caught prey (e.g., tortoises) to more agile, difficult-to-catch prey (e.g., birds) in Mediterranean Pleistocene archaeological sites, presumably as a result of declines in the availability of preferred prey.”

4. Rethinking the starch digestion hypothesis for AMY1 copy number variation in humans. Fernández CI, Wiley AS. Am J Phys Anthropol. 2017 Aug;163(4):645-657. doi: 10.1002/ajpa.23237.

“Although it certainly seems that α-amylase and increased AMY1/AMY2 CN are related to starchy diets in some primates and domestic dogs, we conclude that at present there is insufficient evidence supporting enhanced starch digestion as the primary adaptive function for high AMY1 CN in humans. Existing claims for this function are based on the assumption that salivary α-amylase plays a crucial role in extracting glucose from plant foods. Alpha-amylase’s role in starch digestion is limited to the first (and nonessential) step, while other digestive enzymes and transport molecules are critical for starch digestion and glucose absorption. Specifically, it is assumed that glucose is a major product of α-amylase action, and disregard the essential rate-limiting action of maltase-glucoamylase and sucrase isomaltase enzymes in whole starch hydrolysis. It may be that the early phase of starch digestion is important in some other way, or interacts in complex ways with these other brush border enzymes. Thus far, there is no evidence that there has been selection on the genes for these other enzymes in humans. We show that α-amylase has alternative potential roles in humans, but find that there is insufficient evidence to fully evaluate their adaptive significance at present. AMY1 and AMY2 are widely distributed across diverse life forms; their ancient origin and conservation suggest that they play crucial roles in organismal fitness, but these are not well described, especially among mammals. It could also be that higher AMY1 copies play no adaptive role, but have waxed and waned in copy number without strong selection favoring or disfavoring them. In this regard, Iskow and colleagues (2012) indicate that although several examples of CNV at coding regions show signals of positive selection, it remains unclear whether these examples represent a pattern for CNV in humans. Alternatively, it is suggested that most CNVs across the human genome may have evolved under neutrality due to the existence of “hotspots” for CNV (Cooper, Nickerson, & Eichler, 2007; Perry et al., 2006) and the fact that CNV in the genome of healthy individuals contain thousands of these variants with weak or no phenotypically significant effect (Cooper et al., 2007). More research is necessary to understand the evolutionary significance of high CN and CNV in AMY1 in human populations.”